ORIGINAL

Comparative Study of AI Code Generation Tools: Quality Assessment and Performance Analysis

Estudio Comparativo de Herramientas de Generación de Código por IA: Evaluación de Calidad y Análisis de Desempeño

Michael Alexander Florez Muñoz1 *, Juan Camilo Jaramillo De La Torre1 *, Stefany Pareja López1 *, Stiven Herrera1 *, Christian Andrés Candela Uribe1 *

1Universidad del Quindío. Colombia.

Cite as: Florez Muñoz MA, Jaramillo De La Torre JC, Pareja López S, Herrera S, Candela Uribe CA. Comparative Study of AI Code Generation Tools: Quality Assessment and Performance Analysis. LatIA. 2024; 2:104. https://doi.org/10.62486/latia2024104

Submitted: 04-02-2024 Revised: 10-05-2024 Accepted: 15-08-2024 Published: 16-08-2024

Editor: Prof. Dr. Javier González Argote

ABSTRACT

Artificial intelligence (AI) code generation tools are crucial in software development, processing natural language to improve programming efficiency. Their increasing integration in various industries highlights their potential to transform the way programmers approach and execute software projects. The present research was conducted to determine the accuracy and quality of code generated by AI tools. The study began with a systematic mapping of the literature to identify applicable AI tools. Databases such as ACM, Engineering Source, Academic Search Ultimate, IEEE Xplore and Scopus were consulted, from which 621 papers were initially extracted. After applying inclusion criteria, such as English-language papers in computing areas published between 2020 and 2024, 113 resources were selected. A further screening process reduced this number to 44 papers, which identified 11 AI tools for code generation. The method used was a comparative study in which ten programming exercises of varying levels of difficulty were designed; the results obtained from four of them are presented. The identified tools generated code for these exercises in different programming languages. The quality of the generated code was evaluated using the SonarQube static analyzer, considering aspects such as security, reliability and maintainability. The results showed significant variations in code quality among the AI tools. Bing as a code generation tool showed slightly superior performance compared to the others, although no tool stood out as noticeably superior. In conclusion, the research showed that, although AI tools for code generation are promising, they still require a human pilot to reach their full potential, and there is still a long way to go.

Keywords: Artificial Intelligence; Coding Assistants; Code Generation.

RESUMEN

Las herramientas de generación de código con inteligencia artificial (IA) son cruciales en el desarrollo de software, procesando lenguaje natural para mejorar la eficiencia en la programación. Su creciente integración en diversas industrias destaca su potencial para transformar la manera en que los programadores abordan y ejecutan proyectos de software. La presente investigación se realizó con el propósito de determinar la precisión y calidad del código generado por herramientas de inteligencia artificial (IA). El estudio comenzó con un mapeo sistemático de la literatura para identificar las herramientas de IA aplicables. Se consultaron bases de datos como ACM, Engineering Source, Academic Search Ultimate, IEEE Xplore y Scopus, de donde se extrajeron inicialmente 621 artículos. Tras aplicar criterios de inclusión, como artículos en inglés de áreas de la computación publicados entre 2020 y 2024, se seleccionaron 113 recursos. Un proceso de tamizaje adicional redujo esta cifra a 44 artículos, que permitieron identificar 11 herramientas de IA para la generación de código. El método utilizado fue un estudio comparativo en el que se diseñaron diez ejercicios de programación con diversos niveles de dificultad de los cuales se presentan los resultados obtenidos de 4 de ellos. Las herramientas identificadas generaron código para estos ejercicios en diferentes lenguajes de programación. La calidad del código generado fue evaluada mediante el analizador estático SonarQube, considerando aspectos como seguridad, fiabilidad y mantenibilidad. Los resultados mostraron variaciones significativas en la calidad del código entre las herramientas de IA. Bing como herramienta de generación de código mostró un rendimiento ligeramente superior en comparación con otras, aunque ninguna destacó como una IA notablemente superior. 
En conclusión, la investigación evidenció que, aunque las herramientas de IA para la generación de código son prometedoras, aún requieren de un piloto para alcanzar su máximo potencial, dando a evidenciar que aún queda mucho por avanzar.

Palabras clave: Inteligencia Artificial; Asistentes de Codificación; Generación de Código.

INTRODUCTION

Artificial intelligence (AI) has experienced exponential development in recent decades, transforming virtually every aspect of our lives (Finnie-Ansley et al., 2022; Lee, 2020). From virtual assistants to recommender systems, AI has demonstrated its ability to process and analyze large amounts of data (Gruson, 2021; Ruiz Baquero, 2018), identify complex patterns, and make informed decisions. Thus, it has become a fundamental tool in various fields (Yadav & Pandey, 2020).

One of the most promising fields in which AI has a significant impact is the generation of functional and efficient software code (Yan et al., 2023; Hernández-Pinilla & Mendoza-Moreno, n.d). The importance of code generation using AI lies in its ability to save time and effort (Marar, 2024; Koziolek et al., 2023) while speeding up the software development process and reducing the possibility of human error (Chemnitz et al., 2023; Alvarado Rojas, 2015). In addition, these tools can help understand and learn new programming languages, which is particularly useful for novice developers (Llanos et al., 2021; De Giusti et al., 2023).

Code generation using AI involves using machine learning models and algorithms to create, modify, or improve the source code of a computer program (Wolfschwenger et al., 2023; Azaiz et al., 2023). This technology can potentially revolutionize software development by speeding up the coding process, improving code quality, and facilitating collaboration between developers (Pasquinelli et al., 2022; Tseng et al., 2023).

This study focuses on evaluating the accuracy and quality of code generated by 11 AI platforms. The process includes code generation to solve ten tests of increasing difficulty. Subsequently, the resulting code is evaluated using static code analysis tools. The objective is to compare the performance of the platforms and select the most representative ones, those most capable of generating functional, high-quality code.

This paper has the following sections: methods, results, conclusions, and bibliography.

METHOD

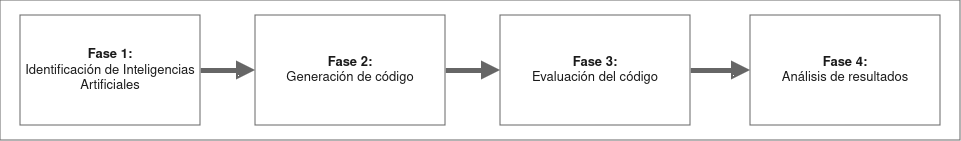

The research was carried out in four main phases: identification of artificial intelligences, code generation, code evaluation and analysis of results.

Figure 1. Phases of the process for the construction of the SMS

Phase 1: identification of artificial intelligences

The main objective of this phase is to define the AI-based tools that will be used for code generation. To that end, a systematic mapping of the literature was carried out to identify artificial intelligences capable of code generation (Macchi & Solari, 2012; Carrizo & Moller, 2018; Kitchenham et al., 2010). A search was conducted in several databases, including ACM, Engineering Source, Academic Search Ultimate, IEEE Xplore, and Scopus, resulting in 621 relevant scholarly articles and online resources. After applying inclusion criteria (articles and proceedings in English, in the areas of engineering and computer science, published between 2020 and 2024), the total number of resources was reduced to 113. Then, a screening process was performed, resulting in 44 articles of interest, which allowed the identification of 11 artificial intelligences for code generation: CodeGPT, Replit, Textsynth, Amazon CodeWhisperer, Bing, ChatGPT, Claude, Codeium, Gemini, GitHub Copilot and Tabnine.
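The inclusion criteria described above can be read as a simple filter over bibliographic records. The following is a minimal sketch of that idea, not the procedure the authors actually used: the `Record` fields, the function names, and the sample records are all hypothetical and serve only to illustrate the criteria (English language, articles or proceedings, engineering or computer science, published between 2020 and 2024).

```python
from dataclasses import dataclass

# Document types and subject areas named by the inclusion criteria.
ALLOWED_TYPES = {"article", "proceedings"}
ALLOWED_AREAS = {"engineering", "computer science"}


@dataclass(frozen=True)
class Record:
    """Hypothetical bibliographic record exported from a database search."""
    title: str
    year: int
    language: str
    doc_type: str
    area: str


def meets_inclusion_criteria(record: Record) -> bool:
    """Return True when a record satisfies every criterion stated in Phase 1."""
    return (
        record.language.lower() == "english"
        and record.doc_type.lower() in ALLOWED_TYPES
        and record.area.lower() in ALLOWED_AREAS
        and 2020 <= record.year <= 2024
    )


def apply_inclusion_criteria(records: list[Record]) -> list[Record]:
    """Keep only the records that pass the inclusion criteria."""
    return [r for r in records if meets_inclusion_criteria(r)]


# Invented sample records, used only to exercise the filter.
sample = [
    Record("Sample study A", 2022, "English", "article", "computer science"),
    Record("Sample study B", 2018, "English", "article", "computer science"),
    Record("Sample study C", 2023, "Spanish", "proceedings", "engineering"),
]
included = apply_inclusion_criteria(sample)
```

In this sketch only "Sample study A" is retained: the second record falls outside the publication window and the third is not in English. The later screening step that reduced the set to 44 articles relied on the reviewers' judgment and is not modeled here.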

Phase 2: code generation

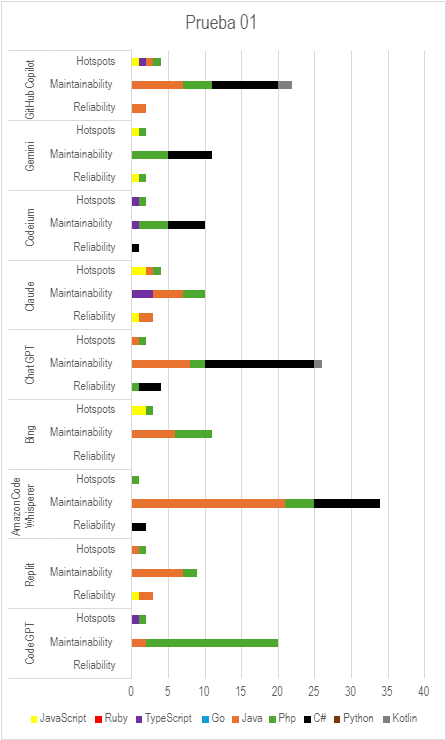

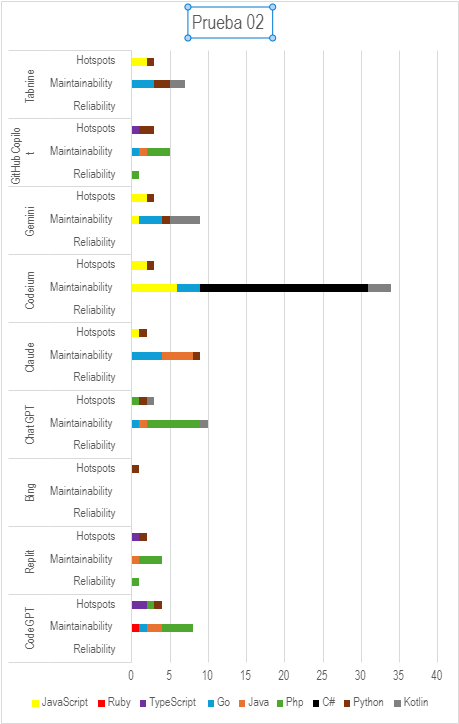

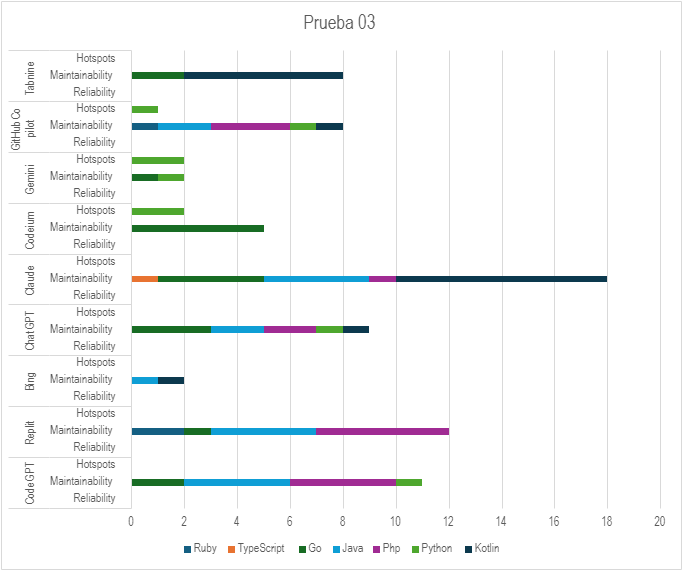

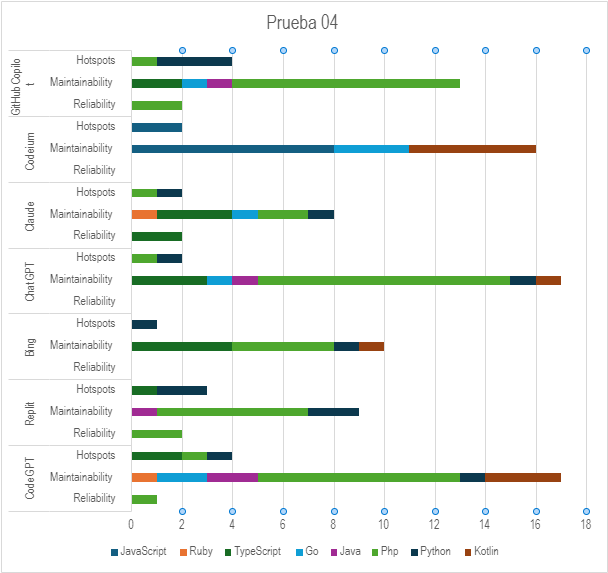

The objective of this phase is to test the previously selected tools. To this end, ten programming exercises were designed with different difficulty levels, covering a wide range of tasks and concepts. These exercises were prepared by the instructor of the seminar "Assistants for code generation". Subsequently, the 11 identified code-generating tools were asked to solve these exercises in different programming languages, such as PHP, Python, Java, JavaScript, TypeScript, C#, Kotlin, Go, and Ruby.

The exercises designed for evaluation are: 1. sort a set of elements, 2. search for an element within a set, 3. count the occurrences of a specific element, 4. register users, 5. implement a REST API for user management (CRUD), 6. develop a GUI for this user management CRUD, 7. perform unit testing for the REST API, 8. perform testing for the user management GUI, 9. implement a REST API for user management with token validation, and 10. create a GUI for this protected CRUD.
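To give a sense of the scale of the simplest tasks, the sketch below shows one possible Python solution to exercises 1 to 3. The source does not reproduce the exact prompts or any generated code, so the function names and signatures here are assumptions chosen purely for illustration.

```python
from collections.abc import Sequence
from typing import Optional, TypeVar

T = TypeVar("T")


def sort_elements(items: Sequence[int]) -> list[int]:
    """Exercise 1 (illustrative): return the elements in ascending order."""
    return sorted(items)


def find_element(items: Sequence[T], target: T) -> Optional[int]:
    """Exercise 2 (illustrative): return the index of the first match, or None."""
    for index, item in enumerate(items):
        if item == target:
            return index
    return None


def count_occurrences(items: Sequence[T], target: T) -> int:
    """Exercise 3 (illustrative): count how many elements equal the target."""
    return sum(1 for item in items if item == target)


values = [4, 1, 3, 1]
ordered = sort_elements(values)          # [1, 1, 3, 4]
position = find_element(values, 3)       # 2
missing = find_element(values, 9)        # None
repeats = count_occurrences(values, 1)   # 2
```

Exercises 4 to 10 involve user registration, REST APIs, GUIs, and tests, so their solutions depend on the framework chosen for each language and are not sketched here.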

Phase 3: code evaluation

The main objective of this phase is to determine the quality of the code generated by each tool in the different languages, taking into account the effectiveness and reliability of each tool in terms of adherence to good programming practices and code performance optimization. To this end, the students reviewed the generated code using static code analysis tooling (Jenkins and SonarQube), which ran different checks to verify code quality with respect to security, reliability, and maintainability, in order to subsequently determine which artificial intelligence generates higher-quality code.
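One way to collect the three quality aspects named above is to read them from the SonarQube Web API after an analysis has run. The sketch below is an assumption about how this could be done, not a description of the authors' setup: it builds a request URL for the `api/measures/component` endpoint and converts a response body into letter grades. The metric keys `security_rating`, `reliability_rating`, and `sqale_rating` (maintainability) and the 1.0 to 5.0 value scale should be checked against the installed SonarQube version; the server address, project key, and sample payload are invented.

```python
import json
from urllib.parse import urlencode

# Assumed SonarQube metric keys mapped to the aspects named in the text.
METRIC_LABELS = {
    "security_rating": "security",
    "reliability_rating": "reliability",
    "sqale_rating": "maintainability",
}


def measures_url(base_url: str, project_key: str) -> str:
    """Build the measures request URL for one analyzed project."""
    query = urlencode(
        {"component": project_key, "metricKeys": ",".join(METRIC_LABELS)}
    )
    return f"{base_url.rstrip('/')}/api/measures/component?{query}"


def rating_to_grade(value: str) -> str:
    """Convert a numeric rating string such as '1.0' into a letter from A to E."""
    rating = int(float(value))
    if not 1 <= rating <= 5:
        raise ValueError(f"unexpected rating value: {value!r}")
    return "ABCDE"[rating - 1]


def parse_grades(payload: str) -> dict[str, str]:
    """Extract the letter grade for each tracked aspect from a response body."""
    measures = json.loads(payload)["component"]["measures"]
    return {
        METRIC_LABELS[m["metric"]]: rating_to_grade(m["value"])
        for m in measures
        if m["metric"] in METRIC_LABELS
    }


# Invented response body, shaped like a measures response, for illustration.
sample_payload = json.dumps({
    "component": {
        "key": "tool-a-exercise-1",
        "measures": [
            {"metric": "security_rating", "value": "1.0"},
            {"metric": "reliability_rating", "value": "3.0"},
            {"metric": "sqale_rating", "value": "2.0"},
            {"metric": "ncloc", "value": "120"},
        ],
    }
})
grades = parse_grades(sample_payload)
url = measures_url("http://localhost:9000/", "tool-a-exercise-1")
```

Keeping the parsing separate from the HTTP call lets the grade conversion be checked without a running server; in practice the URL would be fetched with an authenticated request once per tool, exercise, and language.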

Phase 4: analysis of results

The main objective of this phase is to contrast the results obtained, that is, to determine which tools are most suitable for generating functional, high-quality code. The analysis considered all the information obtained in the code evaluation phase.

RESULTS

This section details the results obtained in the different stages of the project development. Specific search strings were designed for each selected database using the previously defined terms. This approach ensured relevant coverage of the scope of the study.

Search strings

To optimize the collection of relevant documents related to automatic code generation within the field of artificial intelligence, specific search strings were developed for each database, adapted from the previously established terms (Table 1).

Table 1. Search strings

| Database | Search string |
|---|---|
| Academic Search Ultimate | TI ( ("artificial intelligence" OR "intelligent systems" OR ai) AND ("code generation" OR "automatic code creation" OR "automatic code development" OR "software autogeneration" OR "code assistants" OR "code generator" OR "automatic coding") ) OR AB ( ("artificial intelligence" OR "intelligent systems" OR ai) AND ("code generation" OR "automatic code creation" OR "automatic code development" OR "software autogeneration" OR "code assistants" OR "code generator" OR "automatic coding") ) OR KW ( ("artificial intelligence" OR "intelligent systems" OR ai) AND ("code generation" OR "automatic code creation" OR "automatic code development" OR "software autogeneration" OR "code assistants" OR "code generator" OR "automatic coding") ) |
| ACM | [[[Title: "artificial intelligence"] OR [Title: "intelligent systems"] OR [Title: ai]] AND [[Title: "code generation"] OR [Title: "automatic code creation"] OR [Title: "automatic code development"] OR [Title: "software autogeneration"] OR [Title: "code assistants"] OR [Title: "code generator"] OR [Title: "automatic coding"]]] OR [[[Abstract: "artificial intelligence"] OR [Abstract: "intelligent systems"] OR [Abstract: ai]] AND [[Abstract: "code generation"] OR [Abstract: "automatic code creation"] OR [Abstract: "automatic code development"] OR [Abstract: "software autogeneration"] OR [Abstract: "code assistants"] OR [Abstract: "code generator"] OR [Abstract: "automatic coding"]]] OR [[[Keywords: "artificial intelligence"] OR [Keywords: "intelligent systems"] OR [Keywords: ai]] AND [[Keywords: "code generation"] OR [Keywords: "automatic code creation"] OR [Keywords: "automatic code development"] OR [Keywords: "software autogeneration"] OR [Keywords: "code assistants"] OR [Keywords: "code generator"] OR [Keywords: "automatic coding"]]] |
| Engineering Source | TI ( ("artificial intelligence" OR "intelligent systems" OR ai) AND ("code generation" OR "automatic code creation" OR "automatic code development" OR "software autogeneration" OR "code assistants" OR "code generator" OR "automatic coding") ) OR AB ( ("artificial intelligence" OR "intelligent systems" OR ai) AND ("code generation" OR "automatic code creation" OR "automatic code development" OR "software autogeneration" OR "code assistants" OR "code generator" OR "automatic coding") ) OR KW ( ("artificial intelligence" OR "intelligent systems" OR ai) AND ("code generation" OR "automatic code creation" OR "automatic code development" OR "software autogeneration" OR "code assistants" OR "code generator" OR "automatic coding") ) |
| IEEE Xplore | ("Document Title":"artificial intelligence" OR "Document Title":"intelligent systems" OR "Document Title":ai) AND ("Document Title":"code generation" OR "Document Title":"automatic code creation" OR "Document Title":"automatic code development" OR "Document Title":"software autogeneration" OR "Document Title":"code assistants" OR "Document Title": "code generator" OR "Document Title":"automatic coding") OR ("Abstract":"artificial intelligence" OR "Abstract":"intelligent systems" OR "Abstract":ai) AND ("Abstract":"code generation" OR "Abstract":"automatic code creation" OR "Abstract":"automatic code development" OR "Abstract":"software autogeneration" OR "Abstract":"code assistants" OR "Abstract": "code generator" OR "Abstract":"automatic coding") OR ("Author Keywords":"artificial intelligence" OR "Author Keywords":"intelligent systems" OR "Author Keywords":ai) AND ("Author Keywords":"code generation" OR "Author Keywords":"automatic code creation" OR "Author Keywords":"automatic code development" OR "Author Keywords":"software autogeneration" OR "Author Keywords":"code assistants" OR "Author Keywords": "code generator" OR "Author Keywords":"automatic coding") |
| Scopus | TITLE-ABS-KEY ( ( "artificial intelligence" OR "intelligent systems" OR ai ) AND ( "code generation" OR "automatic code creation" OR "automatic code development" OR "software autogeneration" OR "code assistants" OR "code generator" OR "automatic coding" ) ) |
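All the search strings in Table 1 combine the same two groups of terms and differ only in each database's field syntax. The sketch below shows how the Scopus string and the EBSCO-style strings (Academic Search Ultimate and Engineering Source) could be generated from those term lists, which keeps the databases consistent if a term is added or removed. The function names are hypothetical, and the source does not state that the authors generated their strings programmatically.

```python
# Term groups taken from the search strings in Table 1.
AI_TERMS = ['"artificial intelligence"', '"intelligent systems"', "ai"]
CODE_TERMS = [
    '"code generation"',
    '"automatic code creation"',
    '"automatic code development"',
    '"software autogeneration"',
    '"code assistants"',
    '"code generator"',
    '"automatic coding"',
]


def or_group(terms: list[str]) -> str:
    """Join alternative terms with the OR operator."""
    return " OR ".join(terms)


def scopus_query() -> str:
    """Build the Scopus string, which searches title, abstract and keywords at once."""
    return (
        f"TITLE-ABS-KEY ( ( {or_group(AI_TERMS)} ) "
        f"AND ( {or_group(CODE_TERMS)} ) )"
    )


def ebsco_query() -> str:
    """Build the EBSCO-style string, repeating the core query per field code."""
    core = f"({or_group(AI_TERMS)}) AND ({or_group(CODE_TERMS)})"
    return " OR ".join(f"{field} ( {core} )" for field in ("TI", "AB", "KW"))


scopus = scopus_query()
ebsco = ebsco_query()
```

The ACM and IEEE Xplore strings follow the same pattern but attach a field label to every individual term, so they would need a per-term formatter rather than a per-group one.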

After executing the queries, 621 studies were obtained, of which 44 were finally selected.